Garbled Characters in Bing Index: When zstd Meets Search Engine Crawlers

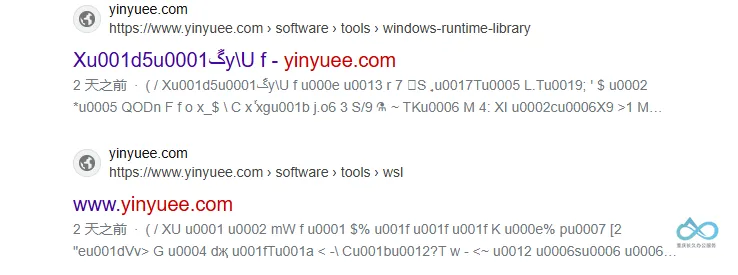

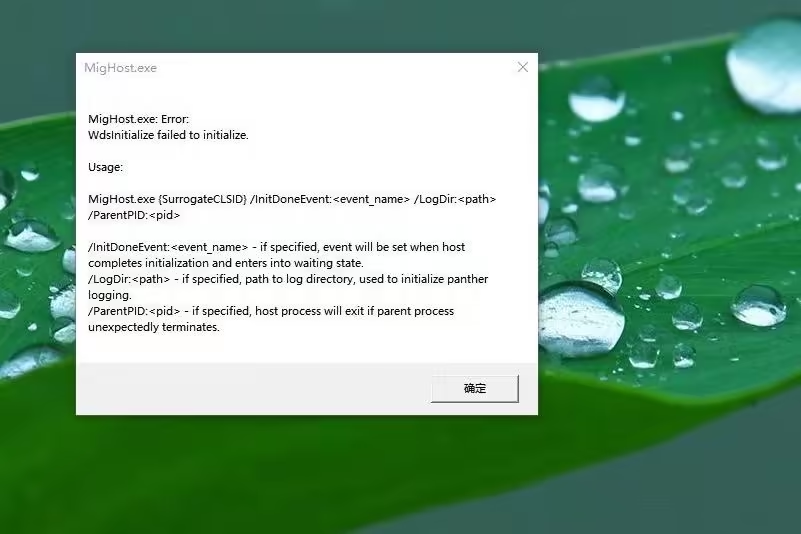

This blog has zstd, gzip, and brotli compression all enabled. Since zstd achieves compression ratios similar to gzip while using significantly less CPU, I made zstd the default. I also added a compatibility fallback: when a client does not list zstd in its Accept-Encoding header, the server falls back to gzip or brotli. The cache likewise keeps separate responses depending on the Accept-Encoding header. However, when I searched for my site recently, I found that some pages showed up in the results as garbled characters.
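The fallback described above can be sketched roughly as follows. This is a minimal illustration, not the blog's actual configuration: the function names, the server preference order (zstd, then brotli, then gzip), and the choice to ignore the `*` wildcard are all assumptions made for the example.

```python
# Hypothetical server-side preference order; the real setup may differ.
SERVER_PREFERENCE = ("zstd", "br", "gzip")


def parse_accept_encoding(header):
    """Parse an Accept-Encoding value into {coding: q-value}."""
    prefs = {}
    for item in header.split(","):
        item = item.strip()
        if not item:
            continue
        name, _, params = item.partition(";")
        q = 1.0  # a coding without an explicit q-value defaults to 1
        for param in params.split(";"):
            key, _, value = param.partition("=")
            if key.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0  # treat a malformed q-value as "not acceptable"
        prefs[name.strip().lower()] = q
    return prefs


def choose_encoding(header, preference=SERVER_PREFERENCE):
    """Pick the first preferred coding that the client listed explicitly.

    Conservative assumption: a "*" wildcard is ignored, so zstd is only
    ever sent to clients that name it.
    """
    prefs = parse_accept_encoding(header or "")
    for coding in preference:
        if prefs.get(coding, 0.0) > 0:
            return coding
    return "identity"  # send the body uncompressed


def response_headers(coding):
    """Headers for the response; Vary keeps caches from mixing variants."""
    headers = {"Vary": "Accept-Encoding"}
    if coding != "identity":
        headers["Content-Encoding"] = coding
    return headers
```

With this sketch, a client that sends `gzip, deflate` gets gzip, and a request with no Accept-Encoding header at all gets an uncompressed body. The `Vary: Accept-Encoding` header is what tells a shared cache to store one variant per header value.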

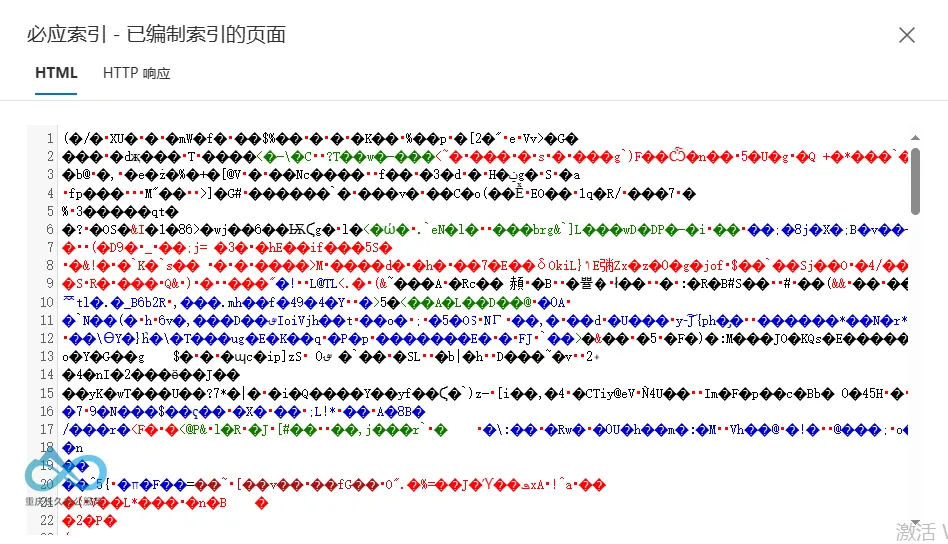

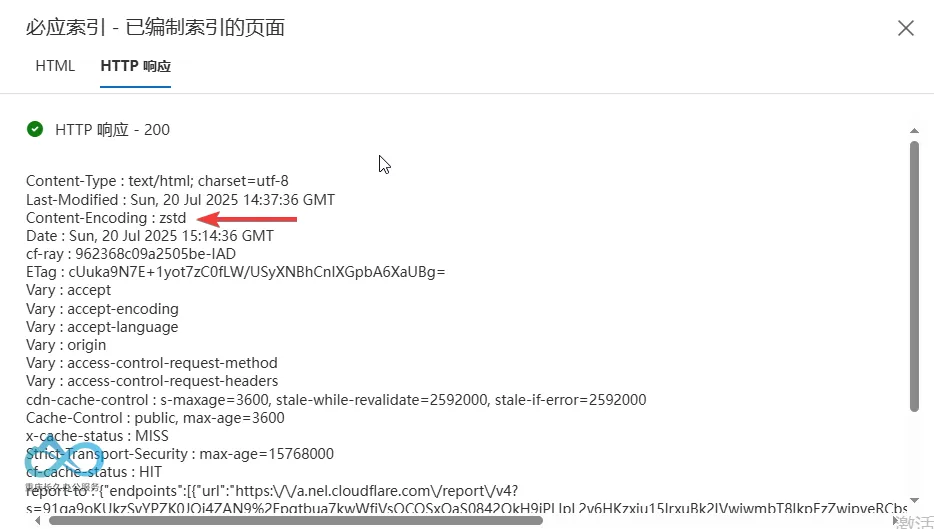

Checking Bing Webmaster Tools revealed:

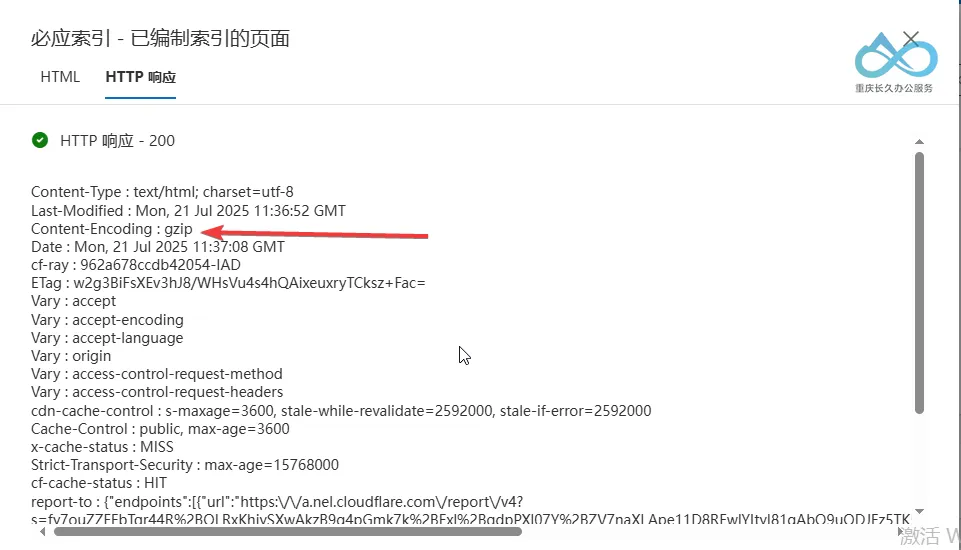

For comparison, this is a page that was indexed normally:

In theory, if Bing's crawler does not send an Accept-Encoding header that contains zstd, the server should never respond with zstd. I could not pin down exactly where the failure occurred, so in the end I switched the default compression back to gzip.
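One way to narrow down a failure like this is to compare what a client asked for with what it actually received, for example on responses captured from the origin and from the cache. The sketch below is only a diagnostic aid under assumptions: the function names are hypothetical, and it relies on the magic bytes that open a standard zstd frame (`28 B5 2F FD`) and a gzip stream (`1F 8B`). Brotli has no magic number, so it cannot be detected this way.

```python
ZSTD_MAGIC = b"\x28\xb5\x2f\xfd"  # start of a standard zstd frame
GZIP_MAGIC = b"\x1f\x8b"          # start of a gzip stream


def sniff_encoding(body):
    """Guess the compression of a raw response body from its first bytes."""
    if body.startswith(ZSTD_MAGIC):
        return "zstd"
    if body.startswith(GZIP_MAGIC):
        return "gzip"
    return None  # unknown: could be brotli, plain text, or something else


def served_unrequested_zstd(accept_encoding, content_encoding, body):
    """Return True if the response is zstd but the request never listed zstd.

    Checks both the Content-Encoding label and the body bytes, so a
    mislabeled cached variant is caught as well.
    """
    requested = {
        part.split(";")[0].strip().lower()
        for part in accept_encoding.split(",")
    }
    is_zstd = (
        content_encoding.strip().lower() == "zstd"
        or sniff_encoding(body) == "zstd"
    )
    return is_zstd and "zstd" not in requested
```

Running this check against responses fetched with a crawler-like Accept-Encoding value (one without zstd) would show whether the negotiation or the cache is handing out the wrong variant.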

As an aside, here is how the three algorithms compare on two articles. The compression levels were 6 for zstd, 5 for gzip, and 4 for brotli. Article 1 contains more code blocks.

| Compression Algorithm | Article 1 | Article 2 |
|---|---|---|
| Uncompressed | 309 KB | 69.6 KB |
| zstd | 27.9 KB | 17.2 KB |
| gzip | 39.5 KB | 16.8 KB |
| br | 28.5 KB | 17 KB |
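
To make the table easier to compare, the sizes can be turned into a percentage of the uncompressed size. The snippet below is a small sketch that only reuses the numbers from the table; the variable and function names are made up for the example.

```python
# Sizes in KB, copied from the table above: (Article 1, Article 2).
UNCOMPRESSED_KB = (309.0, 69.6)
COMPRESSED_KB = {
    "zstd": (27.9, 17.2),
    "gzip": (39.5, 16.8),
    "br": (28.5, 17.0),
}


def percent_of_original(compressed, original):
    """Return {algorithm: (pct for Article 1, pct for Article 2)}."""
    return {
        name: tuple(100.0 * size / full for size, full in zip(sizes, original))
        for name, sizes in compressed.items()
    }


if __name__ == "__main__":
    result = percent_of_original(COMPRESSED_KB, UNCOMPRESSED_KB)
    for name, (a1, a2) in result.items():
        print(f"{name}: Article 1 {a1:.1f}%, Article 2 {a2:.1f}%")
```

On Article 1, the one with more code blocks, zstd and brotli both come out at roughly 9% of the original size against about 12.8% for gzip. On Article 2 all three land between 24% and 25%.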
